This April there was a historic event that was considered impossible to accomplish. That was the capture of an image of a Black Hole.

This was considered to be impossible since black holes are a region of space where the pull of gravity is so dense that nothing can escape, not even light particles, and without light particles it could only be seen as a black void. (Black holes are thought to be created when a massive star collapses into itself). Up to now, scientist had confirmed the presence of a blackholes by its gravitational effect on surrounding material and close by stars. So how were they able to get this image?

It was accomplished through the extreme use of DataOps.

A team of international astronomers set about to capture an image of a black hole’s silhouette. The challenge was to capture an image of the hot, glowing gas falling into a black hole from millions of light-years away. This team of international astronomers also included computer scientists to achieve this feat, improving upon an existing radio astronomy technique for high-resolution imaging and developing algorithms to develop images from very sparse data.

A planet-scale array of eight ground-based radio telescopes forged through international collaboration, called the Event Horizon Telescope (EHT) was designed to capture images of a black hole. The EHT links telescopes, located at high altitude locations, around the globe to form an Earth-sized virtual telescope with unprecedented sensitivity and resolution. Although the telescopes are not physically connected, they are able to synchronize their recorded data with atomic clocks which precisely time their observations. Each telescope produced enormous amounts of data — roughly 350 terabytes per day — which was stored on high performance hard drives and flown to highly specialized supercomputers — known as correlators — at the Max Planck Institute for Radio Astronomy and MIT Haystack Observatory to be combined. They were then painstakingly converted into an image using novel computational tools developed by the collaboration of astronomers and data scientists.

The problem for the data scientist was that the data was still very sparse. While the telescopes collected a massive amount of data, this was just a tiny sampling of the photons that were being emitted. Reconstructing the mage with such sparse data was a massive challenge. There were an infinite number of images that could match the data. Algorithms had to be built to sort through the plausible possibilities, cleansing and curating the data to put the puzzle together. This was a classical DataOps challenge.

Capturing this historic image, is just the beginning of the new innovations that will come out of this project. The algorithms that were used to develop this image will be very valuable for use in other applications, like analyzing MRIs. What is exciting is the possibilities of new applications that could be developed in the future, using these algorithms for reconstructing objects or concepts out of extremely spares data.

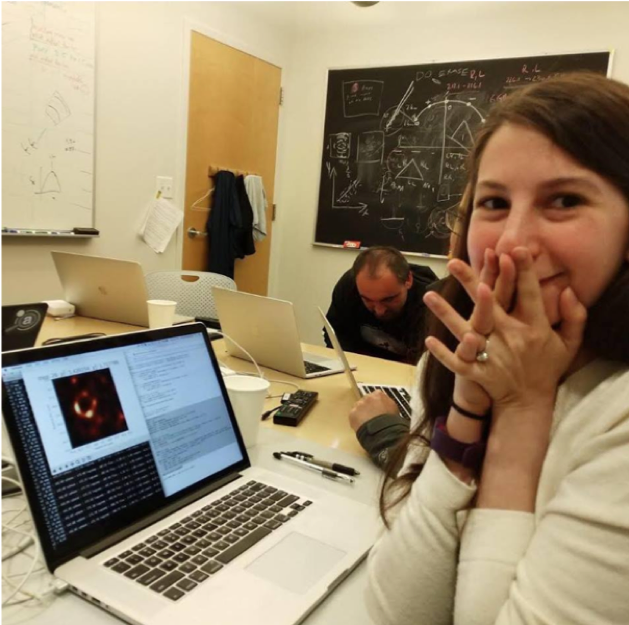

Another exciting thing about this project was the use of graduate students in this project. Can you imagine what these graduate students will be able to contribute as they carry this experience into their future careers? Here is a picture of one of those graduate students, Katie Bouman, who worked in MIT’s computer vision lab as part of a postdoctoral fellowship, collaborating on algorithm improvement across many different fields and computer vision applications. The picture was taken when she first saw the results of her efforts.